More than a decade ago, while beginning my PhD, I experimented with the design of a structured participatory process for evolving stories. The thought was that this could lead to the development of better project designs. A project design should include a theory-of-change, and a theory-of-change, when spelled out in detail, can be seen as a story. But there could be many different versions of that story, some better than others. If so, then how to discover them?

One possibility was to make use of a Darwinian evolutionary process to search for solutions that have the best fit with their environment. The core of the evolutionary process is the evolutionary algorithm: the reiteration of processes of variation, selection and retention. The intention was to design a social process that embodied these features. A similar process was later built in as a core feature of the Most Significant Change (MSC) technique.

I tested the idea out, in a simple and light-hearted way, by involving a classroom of secondary students taught by a friend of mine. The environment in which stories would have to develop and survive was that classroom, with its own culture and history. More serious applications could involve the staff of an organisation, and the environment within and around that organisation.

The process:

- I gave ten of the students some small filing cards, and asked them each to write the beginning of a story on their card, about a student who left school at the end of the year. When completed, these ten cards were posted, as a column of cards, on the left side of the blackboard, in front of the class. This provided some initial variation.

- I then asked the same students to read all ten cards on the board, and for each of them to identify the story beginning they most liked. This involved selection.

- The students were then asked to each use a second card to write a continuation of the one story beginning they most liked. These story segments were then posted next to the story beginning they continued. As a result, some story beginnings gained multiple new segments, others none. This step involved retention of the selected story beginnings, and introduction of further variation.

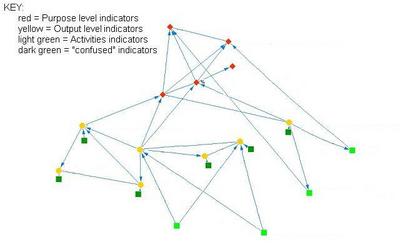

- The students were then asked to look at all the stories again, now that they had been extended. I then asked them to write a third generation story segment, which they were to add to the emerging storyline they most liked so far. This process was reiterated for four generations, until we ran out of class time. One view of the results is the graphic shown in the diagram (the other view being the text of the stories).


Each story segment is represented by one node (circle). Lines connecting the nodes show which story segment was added to which, forming storylines. In the diagram above the storylines start from the centre and grow outwards. The color of each node represents the identity of the student who wrote that story segment. The size of each node varies according to how many "descendants" it had: how many other story segments were added to it later on. The four concentric circles in the background represent the four generations of the process. PS: Each story segment was only one to three sentences long.
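The process and the diagram's structure can be sketched in code. This is a minimal Python simulation under stated assumptions, not a record of what happened in the classroom: a random choice stands in for each student's preference, each new segment is assumed to attach to a segment written in the previous generation, and the names `Segment`, `run_process` and `descendant_counts` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    seg_id: str            # e.g. "1.7" (the numbering scheme is an assumption)
    author: int            # index of the student who wrote the segment
    generation: int        # 1 = story beginning
    parent: Optional[str]  # id of the segment this one continues


def run_process(n_students=10, n_generations=4, seed=0):
    """Simulate variation, selection and retention over several generations."""
    rng = random.Random(seed)
    # Generation 1: every student writes one story beginning (initial variation).
    segments = [Segment(f"1.{i + 1}", i, 1, None) for i in range(n_students)]
    frontier = list(segments)  # segments written in the latest generation
    next_id = n_students + 1
    for gen in range(2, n_generations + 1):
        new_segments = []
        for student in range(n_students):
            # Selection: a random pick stands in for "the storyline I like most".
            chosen = rng.choice(frontier)
            # Retention of the chosen storyline, plus new variation.
            new_segments.append(Segment(f"1.{next_id}", student, gen, chosen.seg_id))
            next_id += 1
        segments.extend(new_segments)
        frontier = new_segments
    return segments


def descendant_counts(segments):
    """Count, for each segment, all later segments that descend from it."""
    parent_of = {s.seg_id: s.parent for s in segments}
    counts = {s.seg_id: 0 for s in segments}
    for s in segments:
        ancestor = s.parent
        while ancestor is not None:
            counts[ancestor] += 1
            ancestor = parent_of[ancestor]
    return counts


segments = run_process()
counts = descendant_counts(segments)
for s in segments:
    if s.generation == 1:
        print(s.seg_id, "descendants:", counts[s.seg_id])
```

The descendant count is the quantity the diagram maps to node size. Replacing `rng.choice` with real choices made by participants would turn the simulation into a simple record-keeping tool.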

The results:

In evolutionary theory success is defined in minimalist terms, as survival and proliferation. In this exercise three of the initial stories did not survive beyond the first generation (1.7, 1.8, and 1.9). Five others did survive until the fourth generation. Of these, two were most prolific (1.6, 1.10), each of which had three descendants by the fourth generation.
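Survival and proliferation can be read straight off the tree of segments. The sketch below is illustrative only: the `toy` data is hypothetical (it is not the classroom result), and "proliferation" is assumed here to mean the number of final-generation segments that trace back to a given story beginning.

```python
from collections import Counter

# Hypothetical toy data: segment id -> (generation, parent id).
toy = {
    "a": (1, None), "b": (1, None), "c": (1, None),
    "d": (2, "a"), "e": (2, "a"), "f": (2, "b"),
    "g": (3, "d"), "h": (3, "d"), "i": (3, "f"),
}


def root_of(seg_id, tree):
    """Follow parent links back to the story beginning."""
    while tree[seg_id][1] is not None:
        seg_id = tree[seg_id][1]
    return seg_id


def survival_and_proliferation(tree):
    """Map each story beginning to its number of final-generation descendants.

    A count of 0 means the storyline did not survive to the last generation.
    """
    last_gen = max(gen for gen, _ in tree.values())
    roots = [s for s, (_, parent) in tree.items() if parent is None]
    finals = Counter(
        root_of(s, tree) for s, (gen, _) in tree.items() if gen == last_gen
    )
    return {r: finals.get(r, 0) for r in roots}


result = survival_and_proliferation(toy)
print(result)  # {'a': 2, 'b': 1, 'c': 0}
```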

Amongst the surviving storylines some were more collective constructions than others. Storylines 1.3 to 1.34, 1.10 to 1.39 and 1.10 to 1.38 had four different contributors (the maximum possible), whereas storylines 1.6 to 1.37 and 1.10 to 1.40 only had two.

As well as analysing the success of different storylines, we can also analyse success at the level of individual participants, using the responses of others as a measure. Individuals varied in the extent to which their story segments were selected by others, and continued by them. One participant's story segments had five continuations by others (see pale brown nodes). At the other extreme, none of the story segments of another participant (see dark green node) were continued by others. Before the exercise I had expected students to favor their own storylines. But as can be seen from the colored nodes in the diagram, this did not happen on a large scale. Some favored their own stories, but most changed storylines at one stage or another.
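Both measures, how collective a storyline is and how often a participant's segments were continued by others, can be computed from the same records. The following is a small sketch with hypothetical data and function names; it assumes each segment is stored with its author and the segment it continues.

```python
# Hypothetical toy data: segment id -> (author, parent id).
toy = {
    "s1": ("ann", None), "s2": ("ben", None),
    "s3": ("ann", "s1"), "s4": ("cat", "s1"), "s5": ("ben", "s2"),
    "s6": ("cat", "s3"), "s7": ("ann", "s4"), "s8": ("ann", "s5"),
}


def storyline(leaf, tree):
    """Return the segment ids from the story beginning down to `leaf`."""
    path = []
    node = leaf
    while node is not None:
        path.append(node)
        node = tree[node][1]
    return path[::-1]


def contributors(leaf, tree):
    """Distinct authors along one storyline (more authors = more collective)."""
    return {tree[seg][0] for seg in storyline(leaf, tree)}


def continued_by_others(tree):
    """For each author, count continuations of their segments written by someone else."""
    counts = {author: 0 for author, _ in tree.values()}
    for author, parent in tree.values():
        if parent is not None and tree[parent][0] != author:
            counts[tree[parent][0]] += 1
    return counts


print(storyline("s6", toy))       # ['s1', 's3', 's6']
print(len(contributors("s8", toy)))  # 2
print(continued_by_others(toy))   # {'ann': 2, 'ben': 1, 'cat': 1}
```

Self-continuations are excluded from the second measure, so it reflects only the responses of others.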

PS: The results of the process are also amenable to social network analysis. Participants can be seen as linked to each other through their choices of whose stories to select and add on to. It may be useful to test whether there are any coalitions at work, either those expected prior to the exercise, or ones which were unexpected but important to know about. Within the school students' exercise, a social network analysis highlighted the presence of one clique of three students, where each added to each other's stories. But two of the students in this clique also added to others' stories, and others added to theirs. See network diagram here.
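One simple way to look for such cliques is to treat each addition as a directed link from the contributor to the author of the segment they added to, then search for groups in which every pair is linked in both directions. The sketch below uses hypothetical data and a brute-force search, which is adequate for a classroom-sized group; it is not the analysis used in the original exercise.

```python
from itertools import combinations

# Hypothetical toy data: (author of the new segment, author of the segment it was added to).
additions = [
    ("ann", "ben"), ("ben", "ann"),
    ("ann", "cat"), ("cat", "ann"),
    ("ben", "cat"), ("cat", "ben"),
    ("cat", "dan"), ("eve", "ann"),
]


def mutual_cliques(edges, size=3):
    """Find groups of `size` people where every pair added to each other's stories."""
    edge_set = {(a, b) for a, b in edges if a != b}  # ignore self-continuations
    people = sorted({person for edge in edge_set for person in edge})

    def mutual(a, b):
        return (a, b) in edge_set and (b, a) in edge_set

    return [
        group
        for group in combinations(people, size)
        if all(mutual(a, b) for a, b in combinations(group, 2))
    ]


cliques = mutual_cliques(additions)
print(cliques)  # [('ann', 'ben', 'cat')]
```

In this toy data `cat` also adds to `dan`, and `eve` adds to `ann`, so the clique is not closed to outsiders, which is the same kind of pattern described above.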

Variations on the process

There are a number of ways in which this process could be varied:

- Vary the extent to which the process facilitator tries to influence the process of evolution. The facilitator could ask all participants to start from one common story beginning in the centre. During the process the facilitator could also introduce events that all storylines must make reference to in one way or another. The facilitator could also choose to specify some desired characteristics of the end of the story. PS: We could see the facilitator as a representative of the wider / external environment.

- Run the process for a longer period. If there were ten generations, or more, it might be possible to find storylines that were built by the contributions of all ten participants. In the wider context it might be of value to find stories that have more collective ownership.

- Allow participants to add two new story segments each, rather than only one. This would increase the amount of variation within the process. But it would also make the process more time consuming. It could be a useful temporary measure to create more variation amongst the stories.

- Limit participation in the process to those whose (initial) storylines had survived so far. This would increase the selection pressure within the process. It could bring the process of evolution to an end (i.e. one story remaining).

- Magnify parts of the process. Take two consecutive segments in a story, and re-run the process to start from the first segment, with the aim of reaching the other segment by the n’th generation.

- Introduce a final summary process. At the desired end time ask each participant to priority rank all the surviving storylines. These judgments could then be aggregated to provide a final score for each storyline. (Normally evolutionary processes go on and on, with different “species” emerging and dying out along the way).
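The final summary step in the last variation needs some rule for aggregating the rankings. One common option is a Borda count, sketched below; the choice of Borda scoring, the function name and the sample rankings are all assumptions for illustration, and other aggregation rules (for example, mean rank) would also fit the description.

```python
def borda_scores(rankings):
    """Aggregate priority rankings into one score per storyline.

    Each ranking lists storylines from most to least preferred. With n
    storylines, first place earns n - 1 points and last place earns 0.
    """
    scores = {}
    for ranking in rankings:
        n = len(ranking)
        for position, storyline in enumerate(ranking):
            scores[storyline] = scores.get(storyline, 0) + (n - 1 - position)
    # Highest score first; ties are broken alphabetically for a stable order.
    return dict(sorted(scores.items(), key=lambda item: (-item[1], item[0])))


# Hypothetical rankings of three surviving storylines by three participants.
rankings = [
    ["A", "B", "C"],
    ["B", "A", "C"],
    ["A", "C", "B"],
]
final = borda_scores(rankings)
print(final)  # {'A': 5, 'B': 3, 'C': 1}
```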

How could this process be used for project development purposes?

It could be used at different stages of a project, during planning, implementation or evaluation. At the planning stage it would help think through different scenarios that the project might have to deal with. At the evaluation stage it might provide different versions of the project history, for external evaluators to look at. During implementation it could provide a mix of both scenario analysis and interpretation of history.

The mix of stakeholders involved in the process could be varied, in different ways:

- The participants could be relatively homogeneous (e.g. all from the same organisation) or more heterogeneous (e.g. from a range of organisations), according to the amount of diversity of storylines that was desired.

- The results of the process generated by one set of stakeholders (e.g. an NGO) could be subject to selection by another (e.g. the NGO's stakeholders). Using the example above, the class teacher could have indicated their preferred storyline from amongst the 10 surviving stories generated by his students.

- It would also be possible to have separate roles for different stakeholders, with one group making the retention decisions (which storylines will be continued) and another making variation decisions (what new story segments are to be added on to the storylines already selected for continuation). The former could be a wide group of stakeholders, and the latter a much smaller group of project planners.

Participants could take on different roles. They could act as themselves or as representatives of specific stakeholders. Responding as individuals may allow participants to think in wider terms than when they are representing their specific stakeholder group. Stakeholder groups could participate via representatives, or as teams (each team making one collective choice about what storylines to continue, and how to do so).

A team approach might promote more thought about each step in the evolving storyline, and how the stakeholder group's collective longer term interests could be best served.

At the other extreme, participants’ contributions could be anonymous (but labeled with a pseudonym). This would allow more divergent and risky contributions that might not otherwise appear.

How is this different from scenario planning?

(From Wikipedia) "Scenario development is used in policy planning, organisational development and, generally, when organisations wish to test strategies against uncertain future developments." There are many different ways in which scenario planning is done, but it appears that there are two stages, at least: (a) identification of different scenarios that are of possible concern, (b) identification of means of responding to those scenarios.

Evolving storylines is different in that both processes are interwoven and continuous. Each new story segment is a response to the previous segment, and in turn elaborates the existing scenario (story) in a particular way. It is more adaptive.

In scenario analysis it appears that scenarios are different combinations of circumstances, each of which is seen as potentially important, such as high inflation and high unemployment. These factors are identified first, prioritised, then used to generate various combinations. Some of these combinations may not be able to occur together, but those that can become the scenarios. With evolving storylines there is no limit on the number or kinds of elements that can be introduced into a story, but there are limits on the number of storylines that can survive.

Scenario analysis seems to be limited to a smaller number of possible outcomes than the storyline process. This may be necessary because the response process is separated from the scenario generation process.

There is also a connection to war games, as applied to the development of corporate strategy (see Economist, May 31st 2007). These involve competing teams and the taking of turns, "allowing competitors not just to draw up their own strategies but to respond to the choices of others". Evolving storylines could take this process a step further, allowing teams to experiment with multiple parallel strategies. Sometimes a portfolio of approaches may be more useful than a single strategy, not only as a way of managing risk, but also as a way of matching the diversity of contexts where an organisation is working. This is especially so for organisations working in multiple countries around the world.

Requests:

- If you have any plans for testing out this process please let me know. I would be happy to provide comments and suggestions: before, during or afterwards.

- I would like to develop ways of making this process work with large numbers of participants via the internet, rather than only in face to face meetings, especially using "open source" processes that could be made freely available via Creative Commons or GNU licenses. If you have any ideas and/or capacity to help with these types of developments please let me know.

regards, rick