Background

This posting has been prompted by work I have done this year for the World Food Programme (WFP) as member of their Evaluation Methods Advisory Panel (EMAP). One task was to carry out a review, along with colleague Mike Reynolds, of the methods used in the 2023 Country Strategic Plans evaluations. You will be able to read about these, and related work, in a forthcoming report on the panel's work, which I will link to here when it becomes available.

One of the many findings of potential interest was: "there were relatively very few references to how data would be analysed, especially compared to the detailed description of data collection methods". In my own experience, this problem is widespread, found well beyond WFP. In the same report I proposed the use of what is known as the Confusion Matrix, as a general purpose analytic framework. Not as the only framework, but as one that could be used alongside more specific frameworks associated with particular intervention theories such as those derived from the social sciences.

What is a Confusion Matrix?

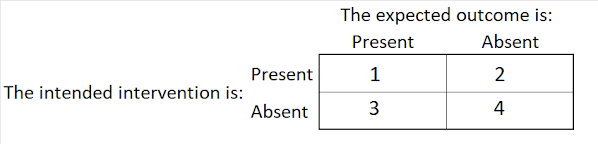

A Confusion Matrix is a type of truth table, i.e., a table representing all the logically possible combinations of two variables or characteristics. In an evaluation context these two characteristics could be the presence and absence of an intervention, and the presence and absence of an outcome. An intervention represents a specific theory (aka model), which includes a prediction that a specific type of outcome will occur if the intervention is implemented. In the 2 x 2 version you can see above, there are four types of possibilities:

- The intervention is present and the outcome is present. Cases like this are known as True Positives

- The intervention is present but the outcome is absent. Cases like this are known as False Positives.

- The intervention is absent and the outcome is absent. Cases like this are known as True Negatives

- The intervention is absent but the outcome is present. Cases like this are known as False Negatives.

The use of Confusion Matrices is most commony associated with the field of machine learning and predictive analytics, but it has much wider application. These include the fields of medical diagnostic testing, predictive maintenance, fraud detection, customer churn prediction, remote sensing and geospatial analysis, cyber security, computer vision, and natural language processing. In these applications the Confusion Matrix is populated by the number of cases falling into each of the four categories. These numbers are in turn the basis of a wide range of performance measures, which are described in detail in the Wikipedia article on the Confusion Matrix. A selection of these is described here, in this blog on the use of the EvalC3 Excel app

The claim

Although the use of a Confusion Matrix is commonly associated with quantitative analyses of performance, such as the accuracy of predictive models, it can also be a useful framework for thinking in more qualitative terms. This is a less well known and publicised use, which I elaborate on below. It is the inclusion of this wider potential use that is the basis of my claim that the Confusion Matrix can be seen as a general-purpose analytic framework.

The supporting arguments

The claim has at least four main arguments:

- The structure of the Confusion Matrix serves as a useful reminder and checklist, that at least four different kinds of cases should be sought after, when constructing and/or evaluating a claim that X (e.g. an intervention) lead to Y (e.g an outcome).

- True Positive cases, which we will usually start looking for first of all. At worst, this is all we look for.

- False Positive cases, which we are often advised to do, but often dont invest much time in actually doing so. Here we can learn what does not work and why so.

- False Negative cases, which we probably do even less often. Here we can learn what else works, and perhaps why so,

- True Negative cases, because sometimes there are asymmetric causes at play i.e not just the absence of the expected causes

- The contents of the Confusion Matrix helps us to identify interventions that are necessary, sufficient or both. This can be practically useful knowledge

- If there are no FP cases, this suggests an intervention is sufficient for the outcome to occur. The more cases we investigate , without still finding a TP, the stronger this suggestion is. But if only one FP is found, that tells us the intervention is not sufficient. Single cases can be informative. Large numbers of cases are not aways needed.

- If there are no FN cases, this suggests an intervention is necessary for the outcome to occur. The more cases we investigate , without still finding a FN, the stronger this suggestion is. But if only one FN is found, that tells us the intervention is not necessary.

- If there are no FP or FN cases, this suggests an intervention is sufficient and necessary for the outcome to occur. The more cases we investigate, without still finding a TP or FN, the stronger this suggestion is. But if only one FP, or FN is found, that tells us that the intervention is not sufficient or not necessary, respectively.

- The contents of the Confusion Matrix help us identify the type and scale of errors and their acceptability. FP and FN cases are two different types of error that have different consequences in different contexts. A brain surgeon will be looking for an intervention that has a very low FP rate, because errors in brain surgery can be fatal, so cannot be recovered. On the other hand, a stockmarket investor is likely to be looking for a more general purpose model, with few FNs. However, it only has to be right 55% of the time to still make them money. So a high rate of FPs may not be a big concern. They can recover their losses through further trading. In the field of humanitarian assistance the corresponding concerns are with coverage (reaching all those in need, i.e minimising False Negatives) and leakage (minimising inclusion of those not in need i.e False Positives). There are Confusion Matrix based performance measures for both kinds error and for the degree that both kinds of error are balanced (See the Wikipedia entry)

- The contents of the Confusion Matrix can help us identify usefull case studies for comparison purposes. These can include

- Cases which exemplify the True Positive results, where the model (e.g an intervention) correctly predicted the presence of the outcome. Look within these cases to find any likely causal mechanisms connecting the intervention and outcome. Two sub-types can be useful to compare:

- Modal cases, which represent the most common characteristics seen in this group, taking all comparable attributes into account, not just those within the prediction model.

- Outlier cases, which represent those which were most dissimilar to all other cases in this group, apart from having the same prediction model characteristics

- Cases which exemplify the False Positives, where the model incorrectly predicted the presence of the outcome.There are at least two possible explanations that can be explored:

- In the False Positive cases, there are one or more other factors that all the cases have in common, which are blocking the model configuration from working i.e. delivering the outcome

- In the True Positive cases, there are one or more other factors that all the cases have in common, which are enabling the model configuration from working i.e. delivering the outcome, but which are absent in the False Positive cases

- Note: For comparisons with TPs cases, TP and FP cases should be maximally similar in their case attributes. I think this is called MSDO (most similar, different outcome) based case selection

- Cases which exemplify the False Negatives, where the outcome occurred despite the absence the attributes of the model. There are three possibilities of interest here:

- There may be some False Negative cases that have all but one of the attributes found in the prediction model. These cases would be worth examining, in order to understand why the absence of a particular attribute that is part of the predictive model does not prevent the outcome from occurring. There may be some counter-balancing enabling factor at work, enabling the outcome.

- It is possible that some cases have been classed as FNs because they missed specific data on crucial attributes that would have otherwise classed them as TPs.

- Other cases may represent genuine alternatives, which need within-case investigation to identify the attributes that appear to make them successful

- Cases which exemplify the True Negatives, where the absence the attributes of the model is associated with the absence of the outcome.

- Normally this are seen as not being of much interest. But there may cases here with all but one of the intervention attributes. If found then the missing attribute may be viewed as:

- A necessary attribute, without which the outcome can occur

- An INUS attribute i.e. an attribute that is Insufficient but Necessary in a configuration that is Unnecessary but Sufficient for the outcome (See Befani, 2016). It would then be worth investigating how these critical attributes have their effects by doing a detailed within-case analysis of the cases with the critical missing attribute.

- Cases may become TNs for two reasons. The first, and most expected, is that the causes of positive outcomes are absent. The second, which is worth investigating, is that there are additional and different causes at work which are causing the outcome to be absent. The first of these is described as causal symmetry, the second of these is described as causal asymmetry. Because of the second possibility is worthwhile paying close attention to TN cases to identify the extent to which symmetrical causes or asymmetrical causes are at work. The findings could have significant implications for any intervention that is being designed. Here a useful comparision would be between maximally similar TP and TN cases.

.png)