This blog posting has been prompted by participation in two recent events. One was some work I was doing with the ICRC, reviewing Terms of Reference for an evaluation. The other was listening in as a participant to this week's European Investment Bank (EIB) conference titled "Picking up the pace: Evaluation in a rapidly changing world".

When I was reviewing some Terms of Reference for an evaluation, I noticed a gap which I have seen many times before. While there was a reasonable discussion of the types of information that would need to be gathered, there was a conspicuous absence of any discussion of how that data would be analysed. My feedback included the suggestion that the Terms of Reference should ask the evaluation team to describe the analytical framework they would use to analyse the data they were collecting.

The first two sessions of this week's EIB conference were on the subject of foresight and evaluation, in other words how evaluators can think more creatively and usefully about possible futures, a subject of considerable interest to me. You might notice that I've referred to futures rather than the future, intentionally emphasising the fact that there may be many different kinds of futures and that, with some exceptions (e.g. climate change), it is not easy to identify which of these will actually eventuate.

To be honest, I wasn't too impressed with the ideas that came up in this morning's discussion about how evaluators could pay more attention to the plurality of possible futures. On the other hand, I did feel some sympathy for the panel members who were put on the spot to answer some quite difficult questions on this topic.

Benefiting from the luxury of more time to think about this topic, I would like to make a suggestion that might be practically usable by evaluators, and worth considering by commissioners of evaluations. The suggestion is how an evaluation team could realistically give attention not just to a single "official" Theory of Change about an intervention, but to multiple relevant Theories of Change about an intervention and its expected outcomes. In doing so I hope to address both issues I have raised above: (a) the need for an evaluation team to have a conceptual framework structuring how it will analyse the data it collects, and (b) the need to think about more than one possible future and how each might be realised, i.e. more than one Theory of Change.

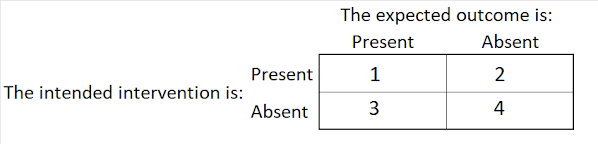

The core idea is to make use of something which I have discussed many times previously in this blog: the Confusion Matrix, as it is known to those involved in machine learning, or more generally a truth table, one that describes four types of possibilities. It takes the following form:

The second (2) describes what is happening when the intervention is present and the expected outcome of that intervention is absent. This theory would describe what additional conditions are present, or what expected conditions are absent, which will make a difference, leading to the expected outcome being absent. When it comes to analysing data on what actually happened, identifying these conditions can lead to modification of the first (1) Theory of Change, such that it becomes a better predictor of the outcome and there are fewer False Positives (found in cell 2). Ideally, the fewer False Positives the better. But from a theory development point of view there should always be some situations described in cell 2, because there will never be an all-encompassing theory that works everywhere. There will always be boundary conditions beyond which the theory is not expected to work. So an important part of an evaluation is not just to refine the theory about what works (1) but also to refine the theory of the circumstances in which it is not expected to work (2), sometimes known as boundary conditions.
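For readers who like to see this kind of sorting made concrete, here is a minimal Python sketch of how cases might be assigned to the cells of the matrix and how the False Positives (cell 2) could be pulled out for closer examination. Everything in it is an assumption made for illustration: the `Case` structure, the village names and their values are invented, and the labels for the two "intervention absent" cells follow the usual machine learning convention rather than any numbering given above. The `precision` figure is just one common way of expressing how good a predictor the intervention is; it is not the only one.

```python
from collections import Counter
from typing import NamedTuple


class Case(NamedTuple):
    """One observed case (hypothetical structure, for illustration only)."""
    name: str
    intervention: bool  # was the intervention present?
    outcome: bool       # was the expected outcome observed?


def cell(case: Case) -> str:
    """Assign a case to one of the four cells of the truth table."""
    if case.intervention and case.outcome:
        return "True Positive"    # cell 1: intervention present, outcome present
    if case.intervention and not case.outcome:
        return "False Positive"   # cell 2: intervention present, outcome absent
    if not case.intervention and case.outcome:
        return "False Negative"   # intervention absent, outcome present
    return "True Negative"        # intervention absent, outcome absent


# Invented example data, not taken from any real evaluation.
cases = [
    Case("Village A", True, True),
    Case("Village B", True, False),
    Case("Village C", True, True),
    Case("Village D", False, True),
    Case("Village E", False, False),
]

# How many cases fall into each cell.
counts = Counter(cell(c) for c in cases)

# The cases a revised Theory of Change (1) would need to account for,
# or that help define the boundary conditions described in (2).
false_positives = [c.name for c in cases if cell(c) == "False Positive"]

# Share of "intervention present" cases where the outcome was also present.
precision = counts["True Positive"] / (
    counts["True Positive"] + counts["False Positive"]
)

print(dict(counts))
print("Cases to examine in cell 2:", false_positives)
print(f"Precision: {precision:.2f}")
```

In practice the interesting work starts after this step: looking at what else is present or absent in the cell 2 cases, and revising the theory so that fewer cases end up there.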